AI Evaluations Platform

Test and compare LLMs in one dashboard with LLM-as-a-Judge scoring, a prompt playground, and full trace logging. Get repeatable, data-driven insights for faster deployment decisions.

Trusted by innovators from top companies

Key features

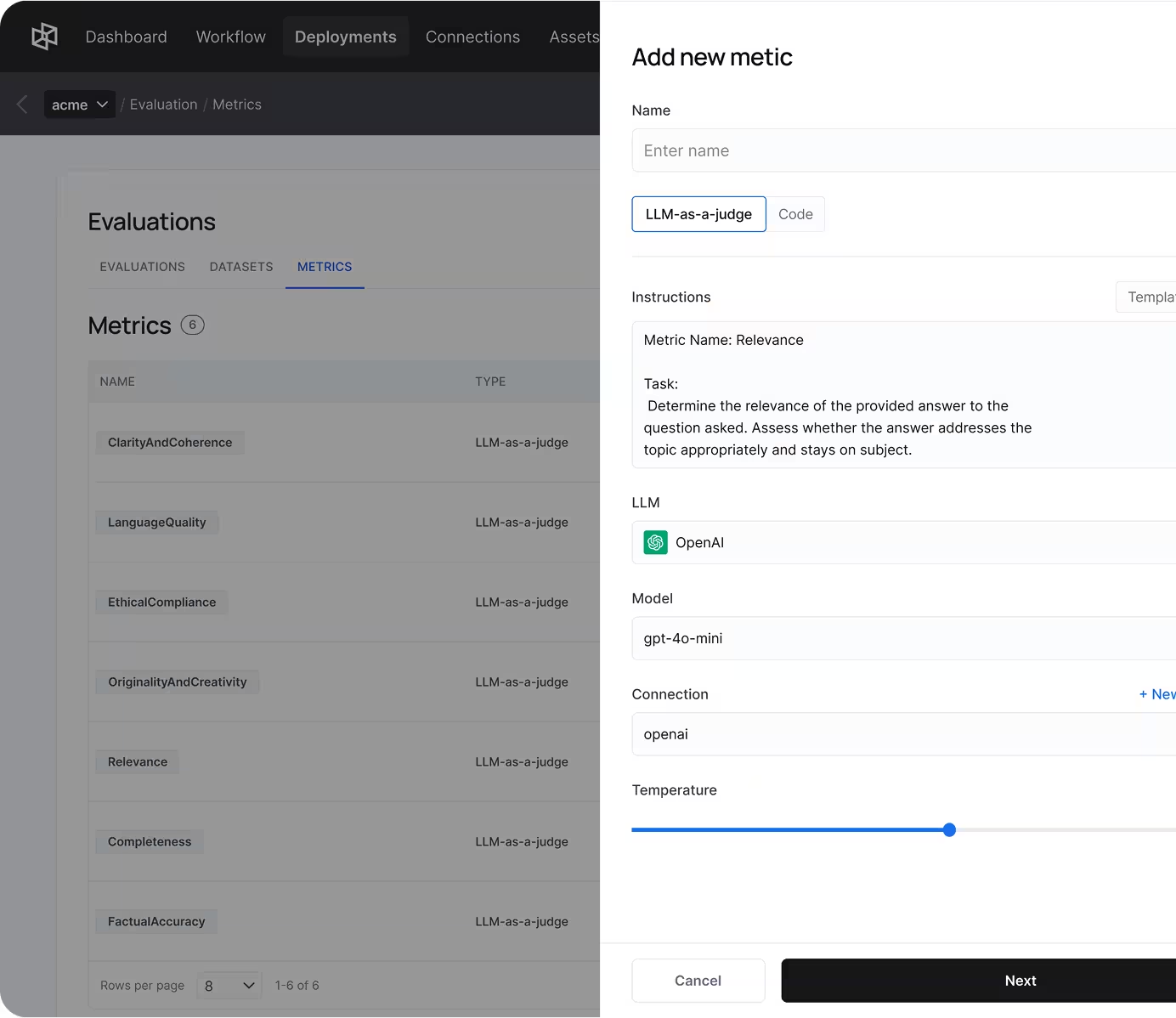

LLM-as-a-Judge Scoring

- Auto-score outputs for relevance, accuracy, style, and compliance

- Use reference answers or custom rubrics—no manual reviews

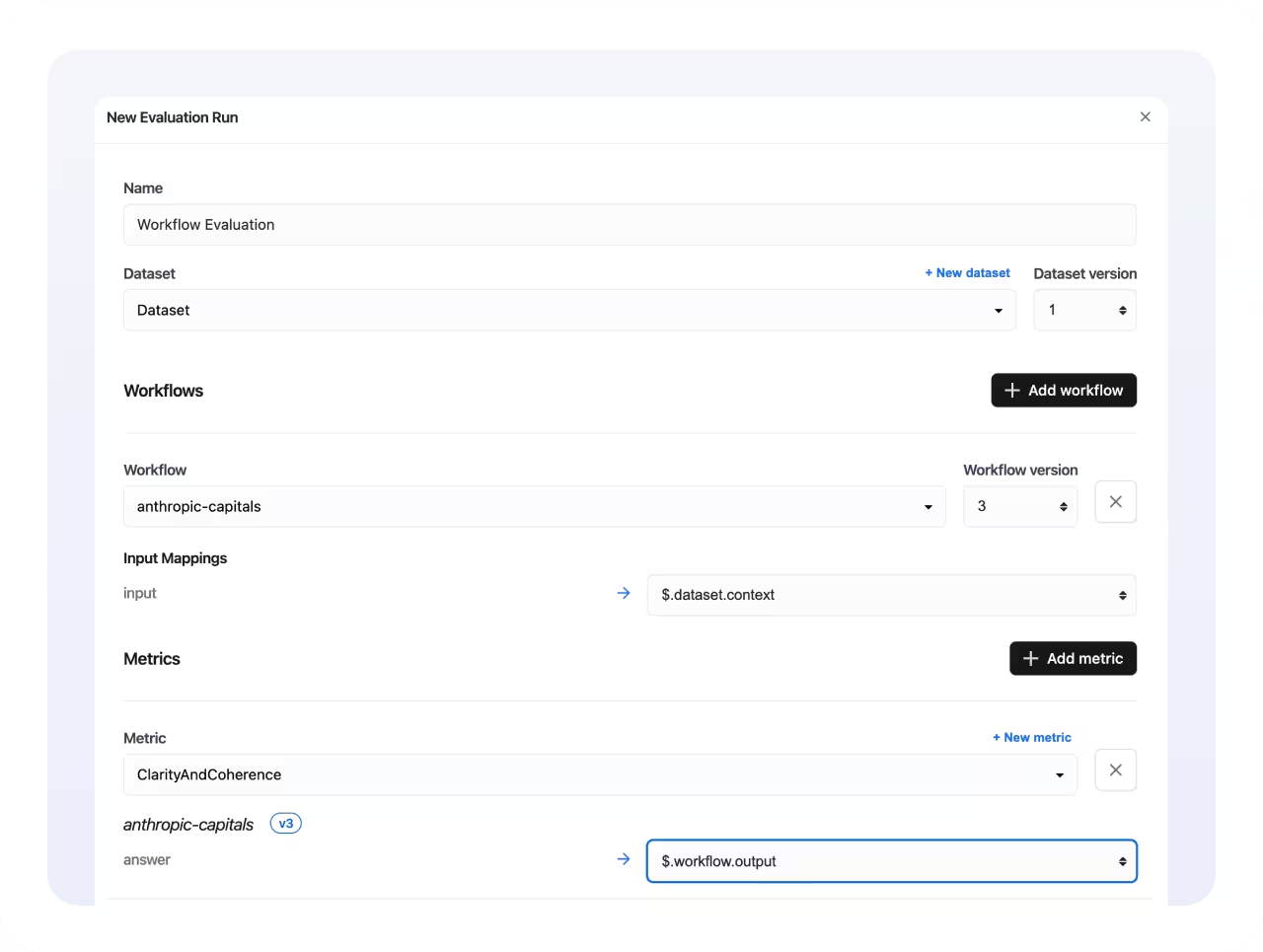

Prompt Playground & Model Comparison

- Test prompts and LLMs side-by-side in one view

- Instantly see score differences between model responses

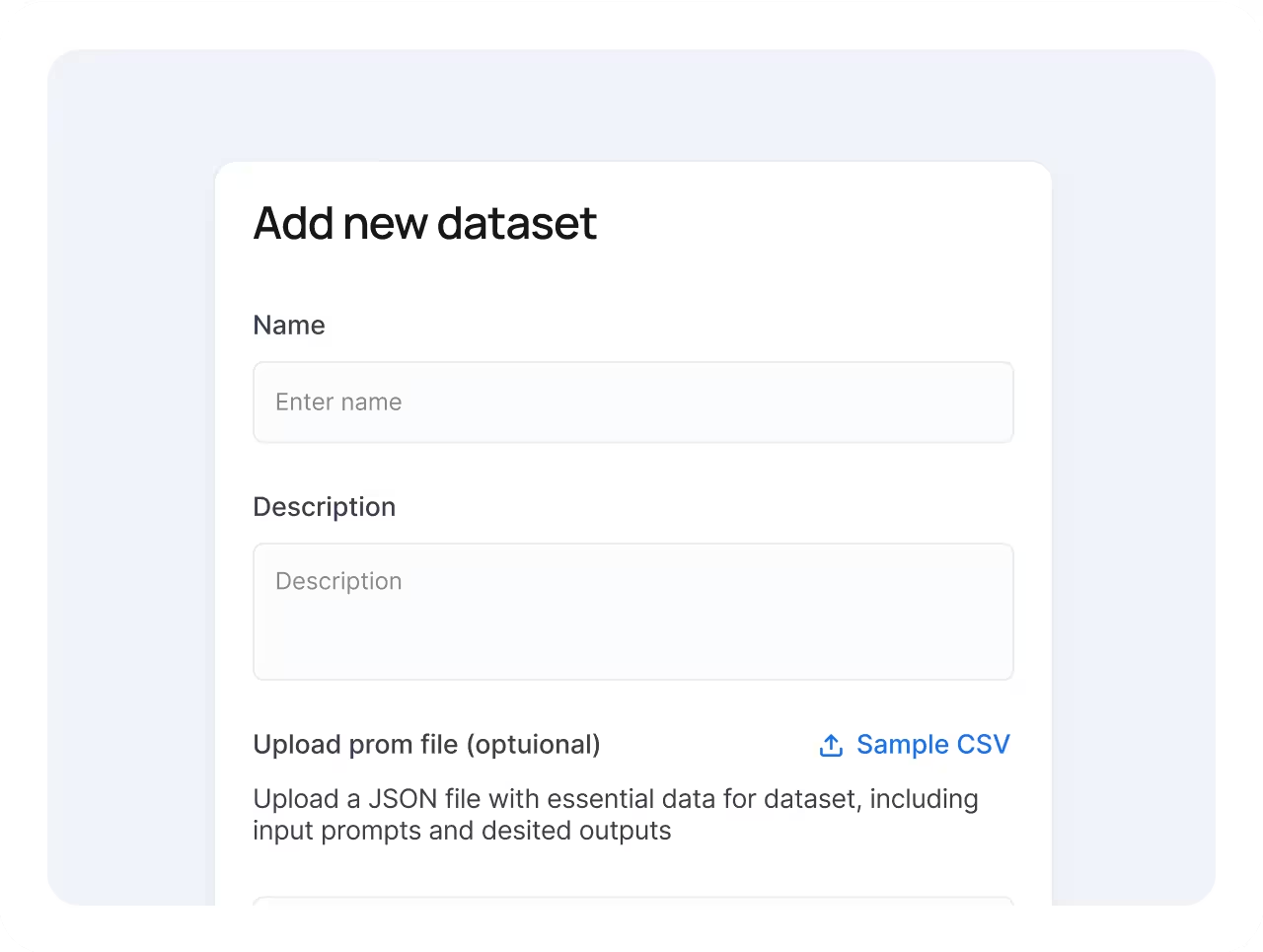

Trace-to-Dataset Saving

- Log every input, output, and score automatically

- Get quick reruns, regression tracking, and sharing across teams

Speed Up Model Selection & Time-to-Market

- Cut POC Cycles in Half. Run side-by-side model tests in minutes and surface the top-performing LLM instantly.

- Data-Driven Prompt Iteration. Refine prompts with live score deltas, reducing guesswork for engineers and PMs.

- Faster Stakeholder Sign-off. Share auto-generated eval reports that show clear winner metrics.

- Lower Experimentation Costs. Run all model tests in one workspace without paying for multiple tools or accounts.

.avif)

.avif)

Ensure Output Quality & Regulatory Compliance

- Catch Hallucinations Early. Continuous eval pipelines flag off-policy or low-confidence responses.

- Audit-Ready Traceability. Every input, output, and score is logged and versioned for easy compliance reviews.

- Custom Compliance Rubrics. Embed domain-specific rules (e.g., HIPAA, financial disclosures) into LLM-as-a-Judge scoring.

- Ongoing Regression Alerts. Schedule recurring tests that trigger notifications if quality scores dip after model updates.

Integrate with your favourite tools

An end-to-end platform to manage the full GenAI application lifecycle

AI workflow builder

Easily orchestrate multiple AI agents to handle complex workflows.

Deployment

Utilize seamless deployment options done in a few clicks.

Guardrails

Ensure reliability and full control over your GenAI applications.

Built-In Observability

Monitor GenAI applications in real time with tracing, cost tracking, and performance insights.

Chat with leading LLMs

Chat with any LLM from a single interface to find the best LLM for your use case.

Knowledge & RAG

Centralize, enhance and unlock the full potential of your data.

Fine-tuning

Fine-tune any LLM on your proprietary data.

FAQ

What are AI evaluations?

How is this different from observability?

Do I need coding skills to run evaluations?

Which models can I test?

Can I automate regression tests?

Start building your GenAI use cases

Curious to find out how Dynamiq can help you extract ROI and boost productivity in your organization? Let's chat.

.avif)