LLM Guardrails in Private Equity: How to Scale GenAI Safely Across Portfolio Companies

LLM outputs are unpredictable, with slight changes in prompts causing shifts in responses. Prompt engineering techniques, model fine-tuning, and human-reinforced learning can improve results, but variability still exists. That is why guardrails for LLMs in private equity are essential.

Developing guardrails should be an indispensable part of the AI development lifecycle, particularly for private equity firms that require compliance, accuracy, and privacy. A missed disclosure, misquoted revenue stat, or leaked data can become a liability that limits scaling.

This article breaks down what LLM guardrails mean in practice and how private equity firms apply them, with metrics you can take back to the investment committee.

Why AI Risks Are Different in Private Equity

Large language models (LLMs) introduce unique challenges for private equity firms. One key reason: these models interact with information across many different businesses, making issues like data exposure and compliance much harder to manage.

This leads to practical difficulties in how AI is used and governed within a portfolio.

- Guardrails are hard to deploy in fragmented environments. Private equity firms must implement AI policies throughout multiple portfolio companies, each with its own tech stack and compliance maturity.

- Exposure to sensitive documents. Private equity workflows involve highly sensitive materials that routinely pass through generative AI tools. Without proper isolation, model context windows, prompt logs, or chat histories can expose this data across sessions or leak it between portfolio companies, increasing the risk of data breaches and data exfiltration.

- Need for jurisdiction-aware controls. Different regulators (like CCPA and CPRA in the US, or GDPR or AI Act in the EU) will have different requirements for generative AI explainability, auditability, and outputs. Global PE firms must ensure that LLMs follow these laws to avoid legal consequences.

- Threat to company reputation. An LLM without ethical standards can hurt deals and lead to regulatory risks. On top of that, a hallucinating LLM-based chatbot can give wrong revenue numbers, falsely attribute a partner in a message, or even expose sensitive data through methods like prompt injection.

- Exit timeliness can be too short. Because guardrails can take months to deploy (especially for complex language models like financial forecasting and vendor contract review), some teams may be forced to skip safeguards entirely to avoid losing time and fund value.

Without adequate safeguards, PE firms may also fail to identify vulnerabilities in model behavior until after an incident occurs. That is why they need to take implementing LLM guardrails seriously.

What LLM Guardrails for Private Equity Mean in Practice

Guardrails are enforceable mechanisms that continuously detect and prevent misbehavior in AI models. Below are the core pillars of applied guardrails in a private equity environment.

Observability

.webp)

Observability tools track how the AI model is being used: what input data it receives, what responses it generates, how the performance changes over time, and how the outputs fall within the expected behavior. The following tools make oversight and control possible:

- Real-time logging tools monitor input prompts and responses with user IDs, timestamps, and metadata (token count, temperature, retrieval augmented generation source, etc.).

- Prompt telemetry identifies how small and significant differences in user prompts, like longer strings, unexpected inputs, introduction of private data, or attempts to bypass safeguards (jailbreaking), affect behavior.

- Anomaly detection tools that detect timeouts, irrelevant answers, hallucinations, or other drifts in performance by identifying patterns of suspicious behavior over time.

- Scenario testing tools that help pressure-test model responses to deliberately malicious prompts and workflows.

Models can shift constantly as new user inputs are introduced, which can affect everything from factual accuracy to tone. So, PE firms must track statistical drift over time to avoid unintentional liability.

Explainability

.webp)

Private equity firms are supposed to rely on LLM models to make critical decisions. It means that human reviewers should be able to verify how AI systems made decisions or reached certain conclusions. The key mechanisms behind this guardrail include:

- Tracking tools that monitor inputs for each model, accessed data sources (in the case of retrieval augmented generation or tool-augmented setups), and model parameters that were in effect.

- Embedded citations inside logs that allow linking specific output directly to internal or external data sources (for example, a sentence from the CIM or data rows in a business intelligence tool).

- Unmodifiable (immutable) ledger that protects logs from post-incident editing, potentially signed with cryptographic hashes or write-once object storage.

- Confidence scores and disclaimers in model outputs to alert human reviewers when they should double-check the generated responses. These features also support response filtering, helping to flag uncertain outputs for human review.

If outputs feel like black-box answers, teams will either ignore them or rely on them blindly. Both scenarios are unacceptable for most PE business processes.

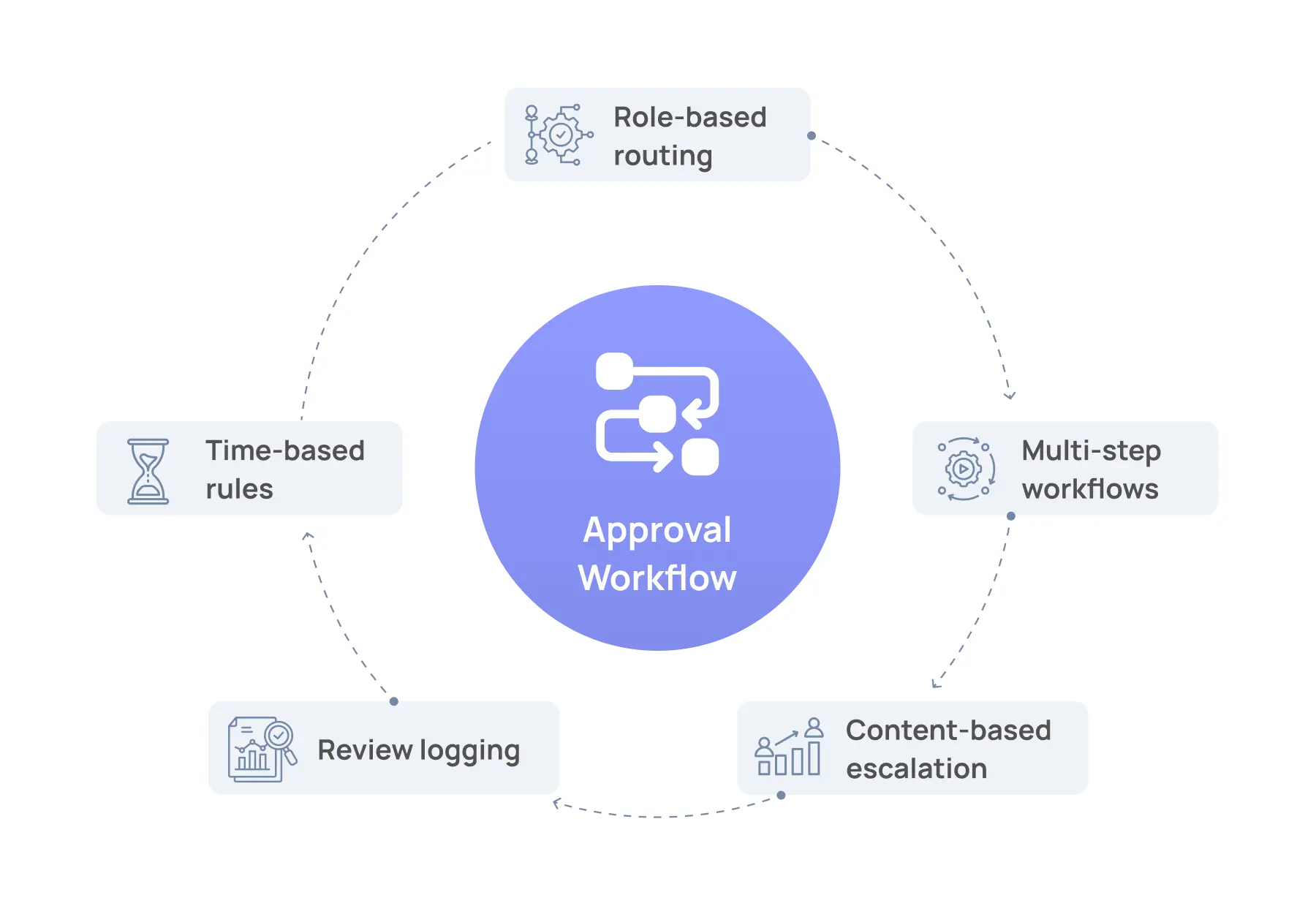

Approval Workflows

An approval workflow is a controlled pipeline that allows managing model-generated outputs based on content risk, user roles, or response type. After all, human review is necessary for complex tasks, like drafting fund disclosures, preparing IC materials, or interpreting legal documents. The technologies below power approval workflows:

- Role-based routing allows firms to define who can produce specific outputs and prevents the risks of data leaks (e.g., if someone were to gain unauthorized access to LLM-generated data).

- Multi-step workflows enable multiple teams to weigh in on generated outputs before being signed off.

- Content-based escalation tools with automated risk classifiers can flag outputs for secondary review or outright reject them.

- Time-based rules enforce cooldown periods or delays for certain types of high-risk content (e.g., for the model to wait for financial data or deal-stage specifics).

- Review logging that logs every approval decision, edits to the output, and supporting metadata.

That said, approval workflows only work when they're tightly interconnected with the tools decision-makers actually use (Salesforce, Microsoft Office, Notion, or proprietary BI tools). Isolated LLM-based software will slow workflows down and encourage users to ignore it.

Security Safeguards

.webp)

Technical safeguards enforce governance at the code level to block malicious prompts, neutralize outputs that deviate from hard-coded parameters, and decrease the risks of security breaches. These controls include:

- Input content filters that reject exploitation techniques (e.g., "ignore system instructions"), block keywords, or input instructions that attempt to exploit system logic.

- Regex patterns and named-entity recognition that detect and redact sensitive corporate data and personally identifiable information (PII). These mechanisms are part of broader data loss prevention strategies.

- Anti-bias tools that block toxicity and discriminatory language in outputs.

- Output filtering layers that screen model responses for tone, intent, and adherence to firm policy.

- Parameter restrictions that limit randomness and output length.

- Role-based execution profiles that change model behavior depending on who is querying.

- Retrieval augmented generation (RAG) design that forces the model to use vetted content from selected sources.

- Encrypted vector stores that let LLMs infer data through a secure virtual private cloud interface endpoint.

Private equity firms that implement generative AI are well aware of their benefits and limitations. Currently, the industry is working on integrating safety checks into machine learning models.

How Leading PE Firms and Funds Approach AI Safeguards

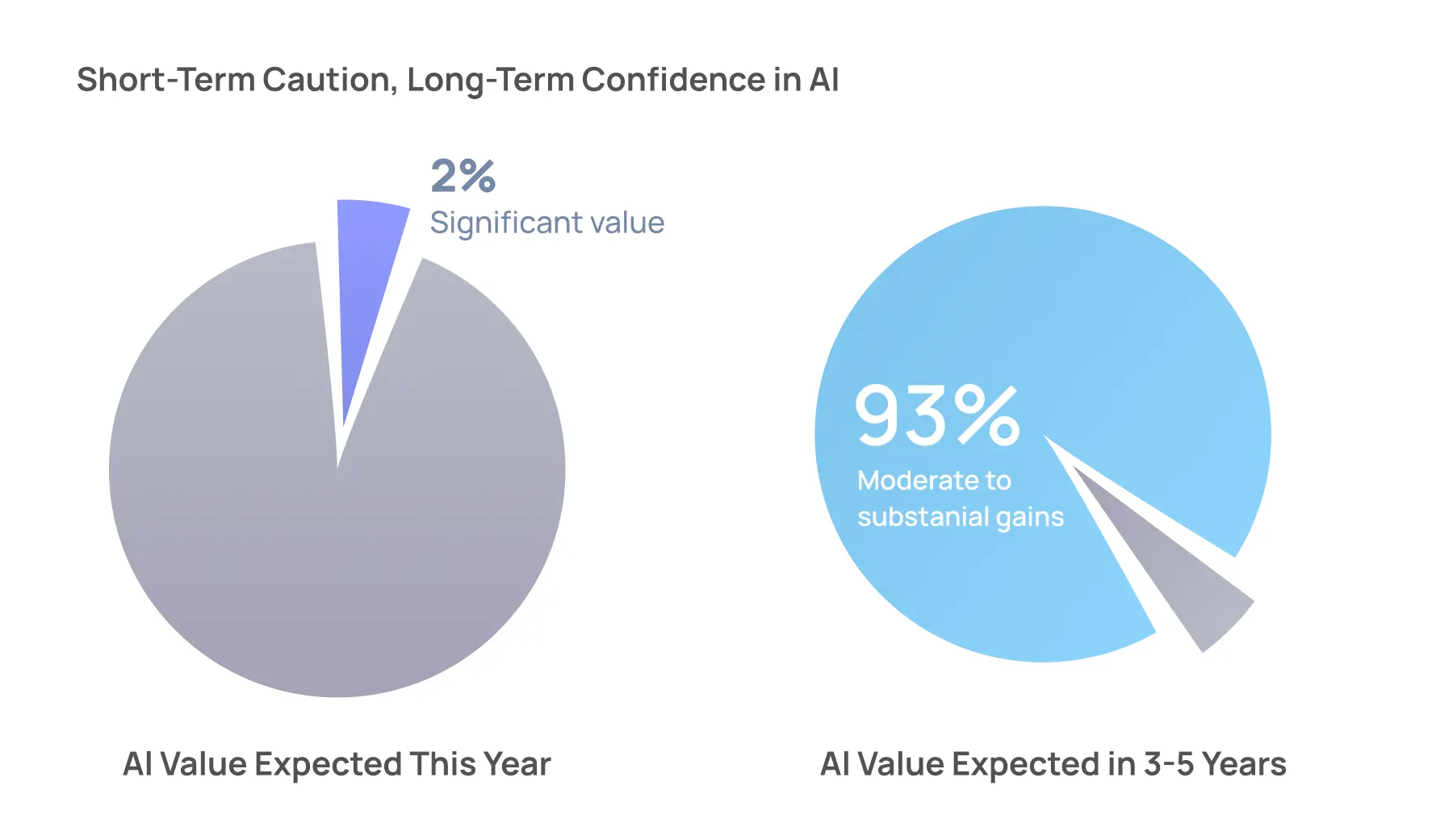

At the World Economic Forum 2025 Annual Meeting, only 2% of general partners expect to see significant value from AI this year. However, 93% of them anticipate moderate to substantial gains over the next three to five years. The consensus was that companies committed to responsible AI development are likely to derive unparalleled value from the technology.

At the same time, delegates of the Responsible Investment Forum highlighted the risk of embedded bias in LLMs, especially in portfolio companies using generative AI in client-facing products. Coaching portfolio leadership on where models can break has become part of active governance.

KPMG’s Per Edin and Microsoft’s Chris Coulthrust outlined key risk areas: data privacy leaks, model failure, and legal exposure from AI-generated decisions, especially when models pull from unreliable external knowledge. The solutions will require a human-in-the-loop and firm-level orchestration (so the governance policies aren’t reinvented for every implementation).

Additionally, EY’s Luke Pais and EQT’s Petter Weiderholm have emphasized the need to separate high-risk AI tasks from low-risk ones. Petter also urges firms to embed human feedback for critical workflows.

As Edin mentioned, ignoring generative AI is not the way to minimize such threats. In fact, it would only lead companies to fall behind the competition. Firms that build early guardrails will move faster, protect fund value, and avoid compliance risks and reputational fallout.

Responsible AI Checklists and Model‑Safety Guides

Technical LLM guardrails for PE must be accompanied by governance policies to mitigate risks in the long term. Each point is a checklist that organizations need to follow to align with responsible AI standards:

- LLM ownership. There should be individuals (or teams) with defined responsibilities for the model training process, prompt engineering reviews, guardrail tuning, and more.

- Schema enforcement. Structured templates for formats like JSON and XML help the model maintain output consistency.

- Ranked generative AI models. PE firms need an inventory of deployed LLM systems. They can be ranked by risk and criticality, such as tier 1 for investor-facing messages, tier 2 for document summaries, and tier 3 for document drafting.

- Secure and auditable telemetry data. Prompt and output logs often contain sensitive deal data, so they must be encrypted at rest, access-controlled, and stored in environments with audit-ready infrastructure.

- Fine-grained filtering in internal audit trails. Guardrails should allow compliance teams to audit every user query, output risk level, data type (legal, financial, etc.), and invoked tools to help with reviews and incident response.

- Explainability dossiers for models. Maintain a document that explains model training data, RAG retrieval sources, confidence score logic, citation behavior rules, and known limitations.

- AI incident response playbook. Define what constitutes a reportable incident and establish clear escalation protocols with notification procedures for different employees.

- Continuous validation cycles. Responsible model deployment requires a schedule of tests and fairness audits to prevent degradation and drift.

There’s one important issue left: getting the IC to greenlight these compliance measures.

Closing the Loop With Investment Committees

Guardrails for LLMs in private equity won’t be approved unless the IC can understand what they’re funding and why.

Stakeholders should understand generative AI risks, so make sure they know that ignoring the guardrails can result in financial losses and damage to the firm’s reputation.

Once an IC greenlights a policy, you can use a low-code AI builder to ship changes quickly. Dynamiq’s platform allows adjusting the underlying model rules without fully retraining or readjusting production pipelines. The goal is to begin collecting key performance indicators (KPIs) early on.

Embed AI metrics into IC dashboards and reports. Make sure to log how implementation affected uptime, hallucination count (accuracy), source reliability, and the overall cost per prompt. If a chatbot automates CIM parsing and cuts diligence by two days, quantify that.

Once oversight is in place, the next challenge is to scale these safeguards alongside the development of generative AI. That’s why many companies use specific governance tools for LLMs.

LLM Guardrails as a Service

Guardrail platforms allow firms to apply security, compliance, and quality rules without having to build every safeguard from scratch.

Dynamiq gives private equity firms full observability into how their LLMs are performing. With our unified platform, teams can track RAG pipeline behavior, run LLM-as-judge scoring to check output quality, and log every prompt and response for audits and historical reviews. Built-in validators catch jailbreak attempts, flag toxic or sensitive content, block unauthorized financial advice, and prevent hallucinations (like accidental mentions of a competitor).

Structured output enforcement helps responses stay within expected formats like JSON, which cuts down on ambiguity and makes integration easier. And for firms that want tighter control, models can be trained solely on internal data and deployed on secure, on-premise servers to meet compliance requirements.

This setup is useful in private equity when you are scaling generative AI systems across portfolio companies with varying risk profiles. Instead of teaching every team how to build regex filters or evaluate prompt accuracy, a shared platform standardizes guardrails while still allowing firm-level orchestration of LLMs for the portfolio.

Conclusion

Guardrails ensure that LLM outputs follow defined rules, preventing incorrect answers, irrelevant tangents, or exposure of sensitive information. By integrating them into applications, you increase their trustworthiness and reliability for CIM parsing, investment memo drafting, LP reporting, contract review, and deal-stage due diligence tasks.

Dynamiq is a low-code platform that enables teams to build, test, and manage agentic AI systems and autonomous agents using a low-code workflow builder. It includes built-in tools for enforcing guardrails, prompt fine-tuning, and behavior tracking. Book your demo today.

%201%20(1).webp)

.webp)