LLM Agents Explained: Complete Guide in 2025

Large language models (LLMs) like GPT-4, Claude, Llama, and Cohere's Command have changed how we think about AI. These models go far beyond simply responding to prompts: they can understand, generate, and apply natural language in ways that closely resemble human communication. Google even reported that Gemini Ultra was the first model to outperform human experts on the Massive Multitask Language Understanding (MMLU) benchmark.

This evolution has opened the door to something even more advanced: LLM agents. Instead of just responding to text input, LLM agents can take action autonomously. They're already being used to write copy, dig through government datasets, generate software code, and support medical professionals.

So, what exactly are large language model agents, and how can they help your business? Read on to find out.

What are LLM Agents?

LLM-based agents are advanced AI systems that rely on large language models like GPT-4 to perform specialized tasks. Among various types of agents, LLM-based systems stand out for their ability to reason through language, use tools, and operate with a high degree of autonomy.

These agents rely on LLMs to interpret natural language, make decisions, and interact with external tools, often without needing constant human oversight.

Recognizing and using complex language patterns allows LLM agents to understand intent, detect nuance, and communicate in a way that mimics human behavior.

Compared to other agents that rely on rigid rules or structured data inputs, LLM agents offer more flexibility and versatility.

What Can LLM Agents Do?

Contrary to popular belief, large language model agents are not the same as conversational LLM applications like ChatGPT. Although both can understand natural language and mimic human communication patterns, LLM agents go further because they can:

- Perform complex tasks. To properly process user requests, LLM agents can break down tasks into manageable chunks and use external data sources and third-party tools. They can search the web, run calculations, or call APIs when necessary.

- Act independently. An LLM agent can work independently without being constantly guided by a user. It can make decisions and take action based on what it has learned and the instructions it receives. For example, if you ask it to summarize a document, it knows that it should read the document first and then create a summary.

With access to past conversations and built-in task planning, LLM agents can carry out personalized, multi-step workflows that evolve over time. They can be used to analyze stock market trends, assist in health data management, run unit tests during development, generate project plans, and even accelerate drug discovery by synthesizing research findings.

Key Components of LLM Agents

Technically speaking, an LLM agent is composed of several core components that work together to interpret, plan, and act. Each element plays a distinct role in how the agent interacts, processes input, and generates output.

Perception

The perception component is responsible for gathering information coming from different kinds of input: text, speech, or images. The quality and breadth of the data collected directly impact the agent's ability to understand user intent and respond effectively.

Agent

The basis of the agent component is a large language model, and this is what differentiates LLM AI agents from other AI agents. The model plays a vital role in processing user input, understanding the context, and generating relevant responses.

The agent component is activated by a user prompt, and in response, the agent populates a prompt template that lists the next steps it should take and the tools available for use.
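To make the prompt-template step concrete, here is a minimal sketch using Python's standard `string.Template`. The template wording, the placeholder names (`request`, `tools`), and the tool names are hypothetical; real frameworks ship their own templates and formats.

```python
from string import Template

# Hypothetical agent template: wording and placeholder names are illustrative only.
AGENT_TEMPLATE = Template(
    "You are an assistant that completes tasks step by step.\n"
    "User request: $request\n"
    "Available tools:\n$tools\n"
    "Decide the next step and name the tool you will use."
)


def build_prompt(request: str, tools: dict[str, str]) -> str:
    """Fill the template with the user's request and a sorted bullet list of tools."""
    tool_lines = "\n".join(f"- {name}: {desc}" for name, desc in sorted(tools.items()))
    return AGENT_TEMPLATE.substitute(request=request, tools=tool_lines)


prompt = build_prompt(
    "Summarize the attached report",
    {"read_file": "Read a document from disk", "summarize": "Condense text"},
)
print(prompt)
```

The filled prompt is what actually reaches the language model, which is why the list of available tools has to be spelled out in it.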

Planning Module

The planning component is responsible for breaking down complex instructions into smaller, manageable actions. It may operate in one of two ways:

- With feedback. The agent adjusts its approach based on the results of previous steps.

- Without feedback. It follows a fixed set of instructions without real-time corrections.
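The difference between the two planning modes can be sketched with a toy search step. This is an illustrative assumption, not how any particular framework implements planning: `search`, `broaden`, and the tiny `INDEX` are hypothetical stand-ins, and "feedback" here simply means checking each result and revising the input before retrying.

```python
from typing import Callable

Step = Callable[[str], str]

# Hypothetical one-entry knowledge index used by the toy search step.
INDEX = {"llm agents": "LLM agents combine a model with tools and memory."}


def search(query: str) -> str:
    """Toy step: returns an empty string when the query is too specific."""
    return INDEX.get(query.lower(), "")


def broaden(query: str, _failed_result: str) -> str:
    """Feedback rule: drop the last word to make the query less specific."""
    return " ".join(query.split()[:-1])


def run_without_feedback(steps: list[Step], data: str) -> str:
    """Open-loop plan: execute every step once, in order, with no corrections."""
    for step in steps:
        data = step(data)
    return data


def run_with_feedback(
    steps: list[Step],
    data: str,
    check: Callable[[str], bool],
    revise: Callable[[str, str], str],
    max_attempts: int = 3,
) -> str:
    """Closed-loop plan: check each result and revise the input until it passes."""
    for step in steps:
        attempt_input = data
        for _ in range(max_attempts):
            result = step(attempt_input)
            if check(result):
                break
            attempt_input = revise(attempt_input, result)
        else:  # no attempt passed the check
            raise RuntimeError(f"step {step.__name__} failed after {max_attempts} attempts")
        data = result
    return data


print(run_without_feedback([search], "LLM agents 2025 guide"))  # empty: no correction
print(run_with_feedback([search], "LLM agents 2025 guide", check=bool, revise=broaden))
```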

Memory Module

The memory module is responsible for the agent's contextual understanding, storing its past reasoning, actions, and observations.

Memory in the LLM context can be divided into two types:

- Short-term memory. Retains context from a current session.

- Long-term memory. Stores information over time, such as user preferences or past interactions.
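A minimal sketch of the two memory types, assuming a bounded in-process buffer for short-term memory and a plain dictionary standing in for a persistent store (a production agent would typically use a database or vector store for the long-term side). The class and method names are hypothetical.

```python
from collections import deque


class AgentMemory:
    """Toy memory module: a bounded short-term buffer plus a long-term key-value store."""

    def __init__(self, short_term_size: int = 4):
        # Short-term: only the most recent turns of the current session are kept.
        self.short_term: deque[str] = deque(maxlen=short_term_size)
        # Long-term: facts meant to survive across sessions (a dict stands in for a database).
        self.long_term: dict[str, str] = {}

    def add_turn(self, turn: str) -> None:
        self.short_term.append(turn)

    def remember(self, key: str, value: str) -> None:
        self.long_term[key] = value

    def end_session(self) -> None:
        # Session context is discarded; long-term facts persist.
        self.short_term.clear()

    def context(self) -> str:
        facts = "; ".join(f"{k}={v}" for k, v in sorted(self.long_term.items()))
        return f"Known facts: {facts}\nRecent turns: {' | '.join(self.short_term)}"


memory = AgentMemory(short_term_size=2)
for turn in ["user: hi", "agent: hello", "user: reply in English please"]:
    memory.add_turn(turn)
memory.remember("language", "en")
print(memory.context())
```

Because the buffer has a fixed `maxlen`, the oldest turn is dropped automatically once the window is full.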

Tools

The ability to use external tools like the Wikipedia Search API or the Code Interpreter Tool is what turns an LLM into an autonomous agent. Tools allow the agent to interact with the outside world, whether that’s by retrieving data, performing calculations, or converting between formats.

Common tool categories include:

- Data analysis tools for statistical processing or reporting

- APIs for accessing structured data or external services

- Web search tools for retrieving up-to-date information

- Text-to-speech/speech-to-text for voice-based interaction

These capabilities allow LLM agents to operate across a wide range of environments and use cases.
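One common way to expose tools to an agent is a registry that maps tool names to functions, so the model's requested tool name can be dispatched safely. The sketch below assumes that pattern; the decorator, the tool names, and `call_tool` are hypothetical, not a specific framework's API.

```python
import math
from typing import Callable

# Registry of tools the agent is allowed to call, keyed by name.
TOOLS: dict[str, Callable[..., object]] = {}


def tool(name: str):
    """Decorator that registers a function under the name the model will request."""
    def register(fn: Callable[..., object]) -> Callable[..., object]:
        TOOLS[name] = fn
        return fn
    return register


@tool("word_count")
def word_count(text: str) -> int:
    return len(text.split())


@tool("square_root")
def square_root(x: float) -> float:
    return math.sqrt(x)


def call_tool(name: str, **kwargs):
    """Dispatch a tool request; unknown tools return an error message instead of crashing."""
    if name not in TOOLS:
        return f"error: unknown tool '{name}'"
    return TOOLS[name](**kwargs)


print(call_tool("word_count", text="LLM agents use tools"))
print(call_tool("web_search", query="latest news"))
```

Returning an error string for unknown tools lets the agent see the mistake and choose again, rather than halting the whole run.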

How Does an LLM Agent Function?

When an LLM agent receives a task, it typically follows a process like this:

- The perception component processes the input.

- The language model determines the intent and generates an initial strategy.

- The planning module breaks the task into discrete steps.

- The memory system contributes relevant historical context or preferences.

- The agent selects the appropriate tools to complete each step.

- A response is generated and returned to the user.

- The interaction is saved for future reference, if needed.
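The process above can be traced end to end in a deliberately tiny agent. This is a sketch under heavy assumptions: a keyword rule stands in for the language model's intent detection, the plan is always a single step, and memory only resolves the word "again" to the previous request. The `MiniAgent` class is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class MiniAgent:
    tools: dict[str, Callable[[str], object]]
    history: list[tuple[str, str]] = field(default_factory=list)

    def run(self, raw: str) -> str:
        # Perception: normalize the incoming text.
        text = raw.strip()
        # Memory: "again" is resolved against the previous request in the history.
        if text.lower() == "again" and self.history:
            text = self.history[-1][0]
        # Intent: a keyword rule stands in for the language model's judgment.
        intent = "word_count" if text.lower().startswith("how many words") else "echo"
        # Planning: turn the intent into (tool, argument) steps.
        argument = text.split(":", 1)[-1].strip() if intent == "word_count" else text
        steps = [(intent, argument)]
        # Tool use: execute each planned step with the selected tool.
        results = [str(self.tools[name](arg)) for name, arg in steps]
        # Response: combine tool results into an answer.
        answer = f"{intent}: {', '.join(results)}"
        # Persistence: store the resolved request and answer for later turns.
        self.history.append((text, answer))
        return answer


agent = MiniAgent(tools={"word_count": lambda s: len(s.split()), "echo": lambda s: s})
print(agent.run("How many words: plan act observe"))
print(agent.run("again"))
```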

LLM agents can take on different roles depending on how they’re designed. Let’s look at some of the most common types and how they perform tasks.

Types of LLM Agents

All LLM agents share common components, but their structure and behavior vary depending on goals and how they interact with users, systems, and external environments. We decided to group them based on how they operate and what they need to accomplish.

Task Agents

These LLM-based agents are designed to complete a specific task end-to-end with minimal user input. Once you give them a goal, they’ll break it down, plan the steps, use tools, and generate a final output.

Interactive Agents

Rather than completing tasks in one go, interactive agents focus on collaboration. They engage with users throughout the process: asking clarifying questions, validating progress, and incorporating feedback.
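A simple way to picture the clarifying-question behavior is slot filling: the agent checks which required details are still missing and asks for them before acting. The travel-booking fields below are a hypothetical example; an LLM-based agent would decide what is missing from the conversation itself rather than from a fixed list.

```python
# Hypothetical required details for a travel-booking task.
REQUIRED_FIELDS = ("destination", "date")


def next_action(collected: dict[str, str]) -> str:
    """Ask a clarifying question while information is missing; otherwise confirm the plan."""
    for field_name in REQUIRED_FIELDS:
        if not collected.get(field_name):
            return f"Could you tell me the {field_name}?"
    return f"Booking a trip to {collected['destination']} on {collected['date']}. Shall I proceed?"


details: dict[str, str] = {}
print(next_action(details))
details["destination"] = "Lisbon"
print(next_action(details))
details["date"] = "12 May"
print(next_action(details))
```

The final confirmation question is the "validating progress" step: the agent checks with the user before taking an irreversible action.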

Retrieval-Augmented Agents (RAG Agents)

These agents are designed to pull in external knowledge (usually from a private or structured knowledge base) and apply it to a user’s query. They’re especially helpful in domains like legal, finance, and healthcare, where the answers often lie in legal databases, detailed documents, or historical data.
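The retrieval step of a RAG agent can be sketched as: score documents against the query, keep the best matches, and place them in the prompt. Real systems usually rank with embedding similarity; the word-overlap scoring below is a swapped-in simplification, and the documents are made-up examples.

```python
import re


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Rank documents by shared query words (a stand-in for embedding similarity)."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return [d for d in ranked[:k] if q & tokenize(d)]


def build_rag_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Number the retrieved passages so the model can cite them in its answer."""
    sources = retrieve(query, documents, k)
    context = "\n".join(f"[{i + 1}] {doc}" for i, doc in enumerate(sources))
    return f"Answer using only the sources below.\n{context}\nQuestion: {query}"


docs = [
    "The notice period for contract termination is 30 days.",
    "Quarterly revenue grew by 12 percent.",
    "Termination requires written notice to the other party.",
]
print(build_rag_prompt("What is the notice period for termination?", docs))
```

Grounding the prompt in retrieved passages is what lets the agent answer from a private knowledge base instead of relying only on what the model memorized during training.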

Multi-Agent Systems

Instead of one agent doing everything, these systems assign roles to different agents that work together. One might be responsible for planning, another for data gathering, and another for synthesis.
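The planner, gatherer, and synthesizer roles can be pictured as agents passing messages along a chain. In this sketch each role's `act` function is a hypothetical stand-in for an LLM call with a role-specific prompt; frameworks such as CrewAI or AutoGen add richer coordination (parallel work, back-and-forth conversation) on top of this basic handoff idea.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RoleAgent:
    role: str
    act: Callable[[str], str]  # stand-in for an LLM call with a role-specific prompt


def run_crew(task: str, crew: list[RoleAgent]) -> list[str]:
    """Pass the task through each agent in turn and record a transcript of handoffs."""
    transcript: list[str] = []
    message = task
    for agent in crew:
        message = agent.act(message)
        transcript.append(f"{agent.role}: {message}")
    return transcript


crew = [
    RoleAgent("planner", lambda task: f"steps for '{task}': gather, summarize"),
    RoleAgent("researcher", lambda plan: f"notes ({len(plan.split(','))} steps covered)"),
    RoleAgent("writer", lambda notes: f"report based on {notes}"),
]
for line in run_crew("compare vendors", crew):
    print(line)
```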

Embedded Agents

These are LLM agents that operate within existing software systems. They're integrated into CRMs, internal dashboards, or SaaS platforms to improve specific workflows. Unlike standalone agents, they’re deeply tied to their context.

Some organizations combine several types of LLM-based agents to help different parts of the business. With the rise of low-code agent platforms like Dynamiq, it’s become much easier to prototype different agent types and see which ones work best for a given use case.

The Benefits of LLM Agents

If your team already uses large language models (e.g., to generate text), LLM agents offer a next step that adds autonomy, memory, and task execution. Here’s what organizations gain from adopting LLM-based agents:

Task Automation at Scale

LLM agents don’t just respond to prompts: they execute multi-step workflows. That makes them ideal for automating repetitive or high-volume processes like summarizing reports, analyzing customer feedback, or generating product descriptions.

Context-Aware Interactions

Thanks to short-term and long-term memory modules, LLM agents can recall conversation history, understand user preferences, and adapt their responses accordingly. This allows for more natural, human-like interactions that improve over time as the agent accumulates context.

Integration with Tools and Data Sources

Unlike basic chatbots, LLM agents can integrate with a wide range of systems. They can call external APIs, query internal databases, and connect to third-party services, which lets them retrieve real-time data, perform calculations, or trigger workflows across systems.

Adaptability Across Domains

Once set up, an LLM agent’s core architecture can often be reused across different departments. For instance, a single agent framework can serve both sales (lead qualification) and HR (resume screening) with different prompt templates and tools.
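The reuse idea can be sketched as one shared agent factory configured per department. Everything here is hypothetical: the `AgentConfig` fields, the tool names, and the fact that the "agent" only echoes its prompt instead of calling a model. The point is that only the configuration changes between the sales and HR agents.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AgentConfig:
    name: str
    prompt_template: str
    tools: tuple[str, ...]  # hypothetical tool names


def make_agent(config: AgentConfig) -> Callable[[str], str]:
    """Return an agent callable; the core logic is shared and only the config differs."""
    def agent(user_input: str) -> str:
        prompt = config.prompt_template.format(input=user_input)
        # A real agent would send `prompt` to a model with `config.tools` available.
        return f"[{config.name}] tools={','.join(config.tools)} | {prompt}"
    return agent


sales = make_agent(AgentConfig("sales", "Qualify this lead: {input}", ("crm_lookup",)))
hr = make_agent(AgentConfig("hr", "Screen this resume: {input}", ("resume_parser", "ats_search")))
print(sales("Acme Corp"))
print(hr("Jane Doe"))
```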

Overall, LLM agents bring structure, memory, and autonomy to what used to be static, prompt-by-prompt exchanges.

LLM Agents Use Cases

McKinsey estimates that generative AI could add $2.6 trillion to $4.4 trillion in value to the global economy annually across the 63 use cases it analyzed. And there are already excellent LLM agent examples in active use:

- AutoGPT: a developer-focused command-line agent

- GPT-Engineer: generates entire codebases from prompts

- AgentGPT: a browser-based tool for quickly spinning up agents

Other applications include:

Customer Support

LLM agents can respond immediately to queries, resolve issues, and guide users through processes. These capabilities have led 82% of 600 surveyed CX leaders to redefine customer care.

Zendesk has integrated LLM agents into its customer support platform, allowing businesses to automate responses to common customer inquiries. In April 2024, Zendesk announced a collaboration with Anthropic and AWS and, a month later, with OpenAI, making GPT-4o accessible to Zendesk users.

Content Creation

LLM agents can help create high-quality written content, including articles, marketing copy, and social media posts. So, it’s no wonder that 43% of over 1,000 marketers surveyed by HubSpot use AI tools for content creation.

One such solution is Copy.ai, which has integrated LLMs to help marketers produce creative content quickly.

Programming Assistance

LLM agents can support developers in many ways: by suggesting code, assisting with bug fixing, and even generating complete code snippets based on user input.

GitHub Copilot, an AI tool originally built on OpenAI Codex, provides real-time code completion suggestions and snippets. GitHub's own research found that developers using Copilot completed a coding task 55% faster than those working without it.

Data Analysis

LLM agents can analyze extensive data sets, derive insights, and create visualizations.

NVIDIA, for example, offers a framework for developing LLM agents. These agents can interact with structured databases via SQL or APIs such as Quandl, extract necessary information from financial reports (10-K and 10-Q), and perform complex data analysis tasks.

Healthcare Support

LLM agents can support healthcare professionals by providing quick access to medical information, medical history, and treatment recommendations.

Google's Med-PaLM 2 scored 85% on USMLE-style medical exam questions, a level Google described as expert, while GPT-4 scored 86% on United States Medical Licensing Examination (USMLE) practice questions, showcasing their potential to support clinical decision-making and education.

Practical Challenges of Using LLM Agents

While the potential of LLM agents is undeniable, building and deploying them in real-world settings comes with layers of complexity that aren’t obvious at first glance.

Reliability and Control

Like all LLM-based systems, agents can sometimes produce unreliable outputs, especially when pushed beyond their finite context length or asked to reason with incomplete information. Even with good prompt engineering and planning modules, the agent might misinterpret user intent or take unnecessary steps.
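One practical control is to never execute model output blindly: parse it, validate it against what the agent is allowed to do, retry a few times, and fall back to a safe action. The sketch below assumes the model is asked to reply with JSON like `{"tool": ..., "input": ...}`; the allowed tool names and the `ask_human` fallback are hypothetical, and `generate` stands in for a model call.

```python
import json
from typing import Callable, Optional

ALLOWED_TOOLS = {"search", "calculator"}  # hypothetical tool names


def parse_action(raw: str) -> Optional[dict]:
    """Accept model output only if it is a JSON object naming an allowed tool with string input."""
    try:
        action = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(action, dict):
        return None
    if action.get("tool") not in ALLOWED_TOOLS or not isinstance(action.get("input"), str):
        return None
    return action


def get_valid_action(generate: Callable[[int], str], max_attempts: int = 3) -> dict:
    """Query the model up to max_attempts times; hand off to a human if every reply is invalid."""
    for attempt in range(max_attempts):
        action = parse_action(generate(attempt))
        if action is not None:
            return action
    return {"tool": "ask_human", "input": "Could not produce a valid action."}


# Simulated model replies: prose, a disallowed tool, then a valid action.
replies = ["Sure! I will search.", '{"tool": "browse", "input": "x"}', '{"tool": "search", "input": "LLM agents"}']
print(get_valid_action(lambda i: replies[i]))
```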

Memory Management

Short-term memory is relatively easy to handle. Long-term memory, on the other hand, is harder to get right. Storing, retrieving, and summarizing relevant context without overwhelming the agent or introducing errors remains a challenge (especially at scale).
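A common tactic for keeping memory from overwhelming the agent is to keep the newest turns verbatim within a budget and fold everything older into a summary. This sketch assumes a word count as a rough proxy for tokens, and `summarize` is a hypothetical callable that would normally be another model call.

```python
from typing import Callable


def compact_history(
    turns: list[str], budget: int, summarize: Callable[[list[str]], str]
) -> list[str]:
    """Keep the newest turns that fit in `budget` words; fold older turns into one summary line."""
    kept: list[str] = []
    used = 0
    for turn in reversed(turns):
        cost = len(turn.split())  # word count as a rough stand-in for tokens
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    kept.reverse()
    older = turns[: len(turns) - len(kept)]
    if older:
        # Note: the summary line itself is not counted against the budget in this sketch.
        kept.insert(0, summarize(older))
    return kept


history = ["user: hi there", "agent: hello how can I help", "user: book a flight", "agent: where to"]
print(compact_history(history, budget=7, summarize=lambda ts: f"summary of {len(ts)} earlier turns"))
```

The hard part in practice is the `summarize` step: a poor summary silently drops details the agent later needs, which is one source of the errors mentioned above.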

Alignment with Business Logic

LLM agents aren’t inherently aware of company policies, compliance requirements, or domain-specific nuances. Teaching agents to behave in ways that match internal processes requires careful prompt design and sometimes fine-tuning, especially in regulated industries.

Cost and Performance Trade-Offs

Running an LLM agent can be resource-intensive. Latency and compute costs become a concern when the agent needs to reason, retrieve relevant data, and respond in real time.
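A back-of-the-envelope calculation shows why multi-step agents cost more than single prompts: each reasoning or retrieval step usually re-sends a growing context. The token counts and per-1,000-token prices below are hypothetical placeholders, not any provider's actual rates.

```python
import math


def estimate_run_cost(
    steps: list[tuple[int, int]], price_in_per_1k: float, price_out_per_1k: float
) -> float:
    """Sum the cost of each model call, given (input_tokens, output_tokens) per step."""
    return sum(
        tokens_in / 1000 * price_in_per_1k + tokens_out / 1000 * price_out_per_1k
        for tokens_in, tokens_out in steps
    )


# Hypothetical prices and token counts; context grows as the agent accumulates results.
single_call = estimate_run_cost([(1000, 200)], 0.01, 0.03)
agent_run = estimate_run_cost([(1000, 200), (1500, 200), (2000, 300)], 0.01, 0.03)
print(f"single call: ${single_call:.3f}, three-step agent run: ${agent_run:.3f}")
```

Under these assumptions the three-step run costs about four times as much as the single call, and each extra step also adds latency.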

That’s why many teams start small by prototyping agents in sandboxed environments before rolling them into production. Using platforms like Dynamiq helps remove some of the infrastructure burden, so you can focus on testing behavior and performance before scaling up.

LLM Agent Frameworks

Many developers rely on LLM agent frameworks to handle orchestration, memory, and tool use, which simplifies what would otherwise be a complex engineering process. Here are some of the most widely used:

LangChain

A popular choice for chaining prompts, tool use, and memory. LangChain supports agent-based decision-making and is especially useful for quickly assembling prototypes or building domain-specific assistants.

OpenAI’s Function Calling

While not a full framework, OpenAI’s tool and function-calling APIs let developers integrate agentic behavior into apps with minimal overhead. It’s particularly effective for lightweight agents that need to call APIs or tools mid-conversation.
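With function calling, the developer describes each function as a JSON Schema in the `tools` parameter of a Chat Completions request; when the model decides to use one, the response contains `tool_calls` whose `function.arguments` field is a JSON string. The sketch below shows only the local side of that exchange: the API request itself is not made, the tool call is a simulated dictionary shaped like one entry of `tool_calls`, and `get_order_status` is a hypothetical business function.

```python
import json


def get_order_status(order_id: str) -> str:
    # Hypothetical business function; a real agent would query an order database.
    return {"A100": "shipped"}.get(order_id, "not found")


# Tool description in the format expected by the Chat Completions `tools` parameter.
TOOLS_SCHEMA = [{
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the shipping status of an order.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
}]

FUNCTIONS = {"get_order_status": get_order_status}


def handle_tool_call(tool_call: dict) -> dict:
    """Run the requested function and build the 'tool' message sent back to the model."""
    fn = FUNCTIONS[tool_call["function"]["name"]]
    args = json.loads(tool_call["function"]["arguments"])  # arguments arrive as a JSON string
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": fn(**args)}


# Simulated tool call, shaped like one entry of a response message's `tool_calls` list.
simulated_call = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "get_order_status", "arguments": '{"order_id": "A100"}'},
}
print(handle_tool_call(simulated_call))
```

The returned message would be appended to the conversation so the model can phrase a final answer using the function's result.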

CrewAI

CrewAI introduces collaboration between multiple agents, each assigned a role (like planner, researcher, or executor). This approach is useful for more complex workflows where task decomposition and communication between agents are needed.

AutoGen

Developed by Microsoft, AutoGen supports multi-agent conversations and task automation. It’s more structured and typically used in enterprise settings for data workflows or report generation that require multiple reasoning steps.

Haystack Agents

Built around retrieval-augmented generation (RAG), Haystack is a strong choice for tasks that depend on structured or document-based knowledge. It’s often used in knowledge-heavy industries like legal or research.

How to Build an LLM Agent

Creating a fully functional LLM agent involves more than just calling a model. It’s a multi-step process that connects language reasoning with planning, memory, and external systems.

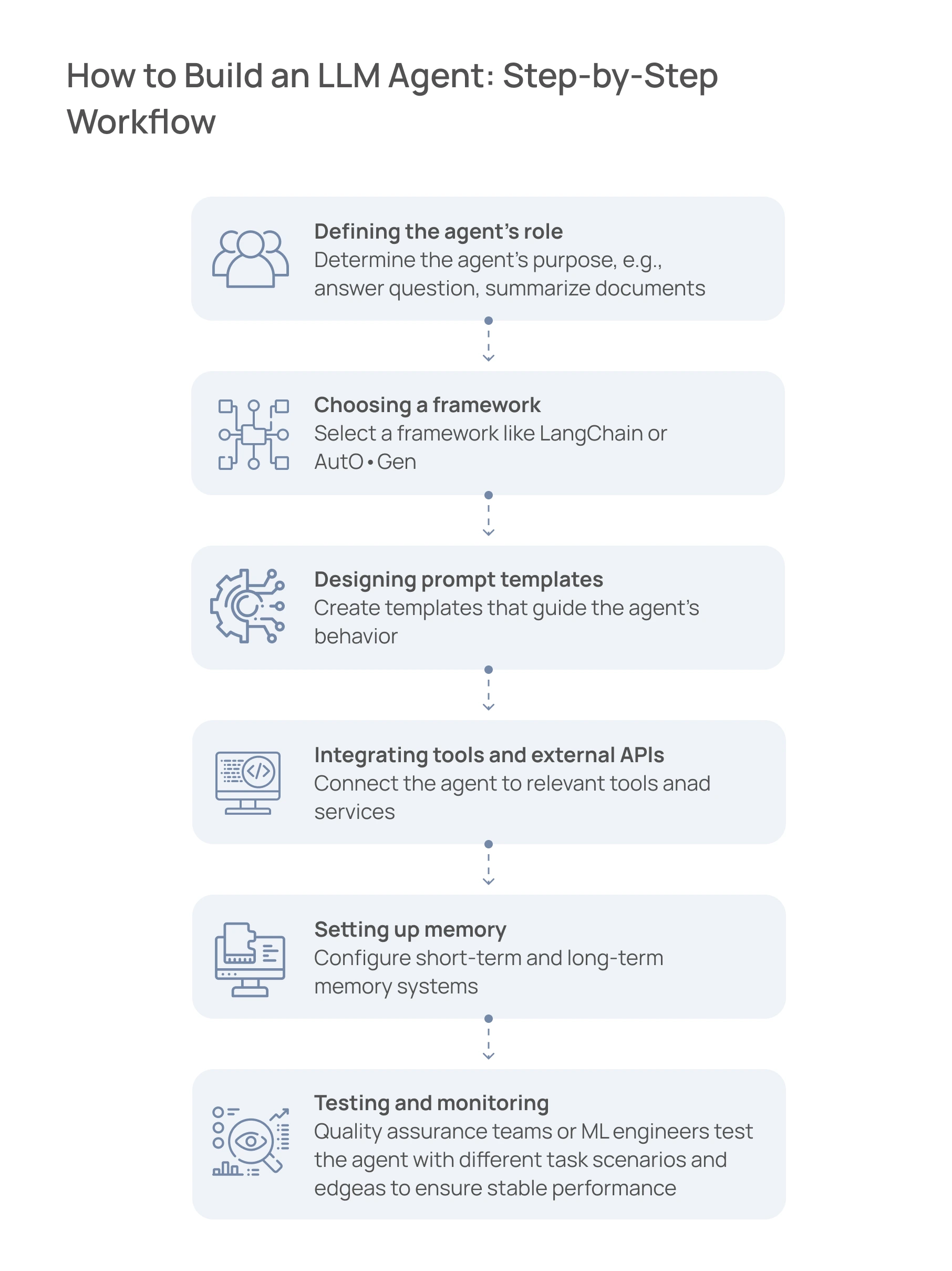

Here’s how you typically build LLM agents:

- Defining the agent’s role. The development team defines what the agent is meant to do: answer support questions, summarize documents, generate reports, write code, etc.

- Choosing a framework. Engineers select a framework like LangChain, AutoGen, or CrewAI to avoid building the agent’s orchestration logic from scratch.

- Designing prompt templates. Prompt engineers create structured templates that guide the agent’s behavior, specify which tools it can use, and outline how it should approach and execute tasks.

- Integrating tools and external APIs. Developers connect the agent to the necessary tools and services (e.g., search engines, code interpreters, or internal CRM systems) based on the agent’s use case.

- Setting up memory. The team configures short-term memory for maintaining context during interactions and long-term memory to store user preferences or historical data.

- Testing and monitoring. Quality assurance teams or ML engineers test the agent with different task scenarios and edge cases to ensure stable performance. They also add monitoring tools, logs, and fallback mechanisms to catch and handle failures during deployment.
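The testing-and-monitoring step can start as small as a scenario runner plus a wrapper that logs failures and returns a fallback message. This is a minimal sketch: `toy_agent`, the scenarios, and the fallback wording are hypothetical, and a real setup would use a proper test framework and production logging or tracing.

```python
import logging
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("agent")

FALLBACK = "Sorry, I couldn't complete that. A human will follow up."


def safe_run(agent: Callable[[str], str], user_input: str) -> str:
    """Run the agent, logging any exception and returning a fallback instead of crashing."""
    try:
        return agent(user_input)
    except Exception:
        log.exception("agent failed on input %r", user_input)
        return FALLBACK


def run_scenarios(agent: Callable[[str], str], scenarios: list[tuple[str, str]]) -> list[str]:
    """Return the inputs whose output does not contain the expected substring."""
    return [inp for inp, expected in scenarios if expected not in safe_run(agent, inp)]


def toy_agent(text: str) -> str:
    # Hypothetical agent that fails on empty input, an edge case worth testing.
    if not text:
        raise ValueError("empty input")
    return text.upper()


print(run_scenarios(toy_agent, [("refund status", "REFUND"), ("", "human will follow up")]))
```

An empty list means every scenario, including the edge case, behaved as expected.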

With so many steps, building an LLM agent often requires weeks of work across product, engineering, and data teams. It also introduces overhead, especially as requirements evolve. And while agent frameworks are great for full customization, they also require time, technical expertise, and maintenance.

How We Can Help

Dynamiq offers a different approach. It’s a low-code platform designed for teams that want to build, test, and deploy LLM agents quickly. You can prototype workflows, connect your own tools or data sources, and switch between language models like GPT-4, Claude, or Llama from one interface.

Whether you're experimenting with agent architectures or need to roll out something production-ready, Dynamiq can reduce setup time and let your team focus on outcomes rather than infrastructure.

Conclusion

LLM agents extend the capabilities of large language models by enabling them to plan, take action, use tools, and remember context across tasks. They can do much more than just answer questions: they help automate workflows, support decision-making, and interact with complex systems.

As tools and frameworks continue to evolve, LLM agents are likely to become a standard part of how intelligent software is designed and deployed. So don't hesitate. Book a demo, and we'll show you just how easy it is to create LLM agents with Dynamiq.